The BOM

Temperature records in Australia are kept by the Australian Bureau of Meteorology, commonly abbreviated to the BOM. It provides a range of invaluable weather services, such as daily temperature forecasts, rain forecasts and storm warnings.

Click here to visit the BOM's web site.

One of my favourite features is the rain radar. Before a storm it’s possible to see the direction and intensity of rain in real time.

This picture shows a rain band extending from Cape Liptrap in Victoria, across Bass Strait, to an area between St. Helens and Launceston in Tasmania.

There are also scraps of rain off Ulladulla and Sydney in southern New South Wales.

The BOM is a vital source of weather information for all Australians.

Climate data and controversy

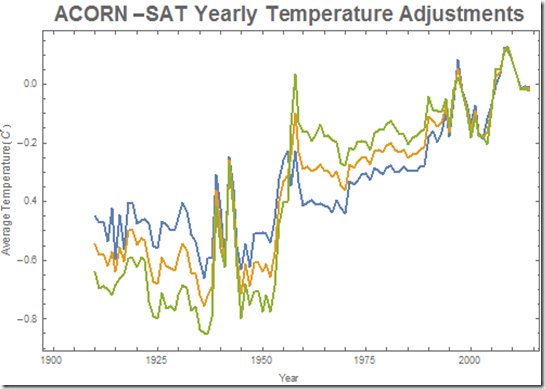

Believe it or not, the BOM has recently been embroiled in a controversy and has been the subject of a government inquiry. The Australian ran the story below, which reported that the ‘homogenised’ temperature records and other adjustments made by the Bureau appeared to have been made to support the hypothesis of human-induced global warming.

Bureau of Meteorology ‘altering climate figures’

Environment Editor

Sydney

Researcher Jennifer Marohasy has claimed the Bureau of Meteorology’s adjusted temperature records resemble ‘propaganda’ rather than science. Source: News Corp Australia

THE Bureau of Meteorology has been accused of manipulating historic temperature records to fit a predetermined view of global warming.

Researcher Jennifer Marohasy claims the adjusted records resemble “propaganda” rather than science.

Dr Marohasy has analysed the raw data from dozens of locations across Australia and matched it against the new data used by BOM showing that temperatures were progressively warming.

In many cases, Dr Marohasy said, temperature trends had changed from slight cooling to dramatic warming over 100 years.

BOM has rejected Dr Marohasy’s claims and said the agency had used world’s best practice and a peer reviewed process to modify the physical temperature records that had been recorded at weather stations across the country.

It said data from a selection of weather stations underwent a process known as “homogenisation” to correct for anomalies. It was “very unlikely” that data homogenisation impacted on the empirical outlooks.

In a statement to The Weekend Australian, BOM said the bulk of the scientific literature did not support the view that data homogenisation resulted in “diminished physical veracity in any particular climate data set”.

Historical data was homogenised to account for a wide range of non-climate related influences such as the type of instrument used, choice of calibration or enclosure and where it was located.

“All of these elements are subject to change over a period of 100 years, and such non-climate related changes need to be accounted for in the data for reliable analysis and monitoring of trends,” BOM said.

Account is also taken of temperature recordings from nearby stations. It took “a great deal of care with the climate record, and understands the importance of scientific integrity”.

Dr Marohasy said she had found examples where there had been no change in instrumentation or siting and no inconsistency with nearby stations but there had been a dramatic change in temperature trend towards warming after homogenisation.

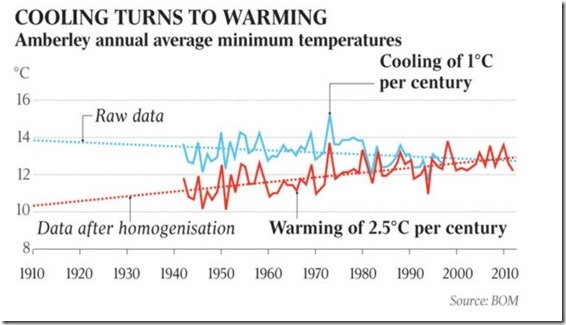

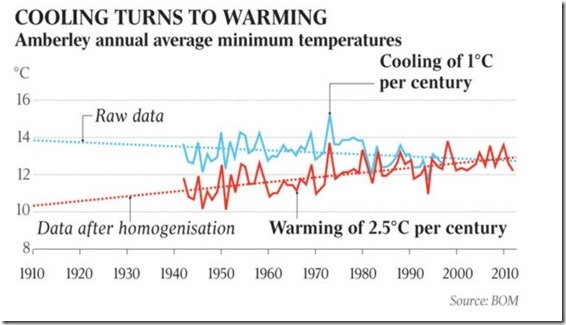

She said that at Amberley in Queensland, homogenisation had resulted in a change in the temperature trend from one of cooling to dramatic warming.

She calculated homogenisation had changed a cooling trend in the minimum temperature of 1C per century at Amberley into a warming trend of 2.5C per century. This was despite there being no change in location or instrumentation.

BOM said the adjustment to the minimums at Amberley was identified through “neighbour comparisons”. It said the level of confidence was very high because of the large number of stations in the region. There were examples where homogenisation had resulted in a weaker warming trend.

You can visit Dr. Marohasy's web site by clicking here.
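The article doesn't say how Dr Marohasy calculated her trends. A common approach, assumed here, is to fit an ordinary least-squares line to annual mean temperatures and scale the slope from degrees per year to degrees per century. The sketch below does that and shows, on made-up data, how a single step adjustment to the early part of a record can turn a cooling trend into a warming one. The function name `trend_per_century` and all the numbers are hypothetical; none of it is BOM or Amberley data.

```python
# Hypothetical sketch: least-squares temperature trend expressed in degrees C per century.
# Assumes one value per year; the series below are synthetic, not real station data.

def trend_per_century(years, temps):
    """Slope of an ordinary least-squares fit of temps against years, times 100."""
    n = len(years)
    if n != len(temps) or n < 2:
        raise ValueError("need two equal-length series with at least two points")
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    return sxy / sxx * 100  # degrees per year -> degrees per century


years = list(range(1910, 2010))  # 100 synthetic years
# Synthetic "raw" record: cools steadily at 0.01 C per year (1 C per century).
raw = [15.0 - 0.01 * (y - 1910) for y in years]
# Synthetic "adjusted" record: every year before 1960 lowered by 2 C, later years unchanged.
adjusted = [t - 2.0 if y < 1960 else t for y, t in zip(years, raw)]

print(round(trend_per_century(years, raw), 2))       # -1.0
print(round(trend_per_century(years, adjusted), 2))  # 2.0
```

A single downward step in the early years is enough, on its own, to reverse the sign of the fitted trend.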

The BOM under investigation

In January 2015 the Parliamentary Secretary for the Environment, Bob Baldwin, appointed a Technical Advisory Forum on Climate Records to review the Bureau’s practices. You can see Mr. Baldwin's press release, the forum's terms of reference and its membership by clicking here.

The Forum delivered its report in June 2015. It was not very startling, but it did make several recommendations.

These are, in brief:

- Improve communications about climate data, specifically uncertainties, statistical methods and adjustments.

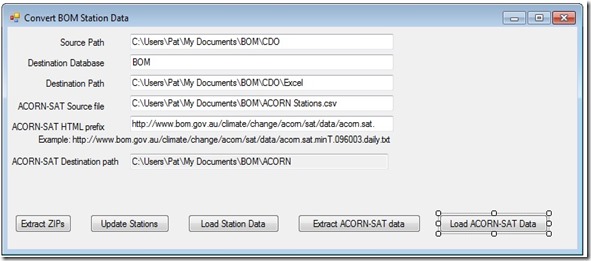

- Improve accessibility to both raw and adjusted climate data. I’ll have more to say about this in my next post where I’ll detail the hoops I had to jump through to access the data.

- Improve the statistical methods used in determining which records require adjusting.

- Improve the handling of metadata. Metadata includes non-climate data like the history of movements of weather stations or instrumental changes.

- Expand the range of data included. The BOM does not include data prior to 1910. In fact it has records dating back to 1855. The 1890s were particularly hot in Australia. A cynical, sceptical person might say the older records have been excluded because they make a nonsense of statements of recent years being the ‘hottest on record’.

The BOM has been accused of other tampering:

Cyclone Marcia made landfall near Rockhampton, Queensland, in February 2015. The Bureau claimed in its press release that Marcia had reached Category 5, the highest category on its scale. Winds of that strength far exceed Force 12 (‘hurricane force’), the top of the Beaufort scale. You can read about the Bureau's categories here.

The highest recorded wind speed of 208 kilometres per hour and the lowest measured barometric pressure of 972 to 975 hectopascals mean the cyclone was of Category 2. Still serious, still dangerous, but not out of the ordinary.

The Bureau appeared to be more interested in scary headlines than accuracy.

Just last week, when quizzed about a weak cyclone forming near the Solomon Islands, a Bureau representative claimed, “We’ve never had a July tropical cyclone in the Queensland region before.” The representative ‘forgot’ about July cyclones in 1935, 1954 and 1962.

What has driven me to look at the Bureau’s data myself is a claim by Lance Pidgeon: according to the Bureau, the highest maximum temperature at Eucla, on the Nullarbor in Western Australia, for December 1931 was less than 36 degrees Celsius, yet the average was more than 36 degrees. This is, of course, a mathematical impossibility, because an average can never exceed the largest value that goes into it.
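An inconsistency like this is easy to test for mechanically. The hypothetical check below takes each month's reported highest value and average and flags the month if the average is above the highest. The field names (`year`, `month`, `highest`, `average`) and the 35.9 and 36.2 figures are made up for illustration; the source only says the two values fall either side of 36 degrees, and this is not the format of any BOM file.

```python
# Hypothetical sketch: flag monthly summaries whose average exceeds their highest value.
# Field names and figures are illustrative only, not the BOM's actual file format or data.

def is_consistent(highest, average):
    """An average of daily readings can never exceed the largest of those readings."""
    return average <= highest


def flag_impossible_months(summaries):
    """Return (year, month) for every summary whose average is above its highest value."""
    return [
        (s["year"], s["month"])
        for s in summaries
        if not is_consistent(s["highest"], s["average"])
    ]


summaries = [
    {"year": 1931, "month": 12, "highest": 35.9, "average": 36.2},  # made-up values
    {"year": 1932, "month": 1, "highest": 44.0, "average": 31.5},   # made-up values
]
print(flag_impossible_months(summaries))  # [(1931, 12)]
```

No rounding tolerance is needed: rounding both values to one decimal place cannot push a valid average above its maximum.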

So, I’ve embarked on a project to download the various BOM databases, examine both the raw and adjusted records and come to my own conclusions about the Bureau’s processes.
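The Bureau said the Amberley adjustment was identified through “neighbour comparisons”, but the article doesn't describe the method. What follows is only a minimal sketch of the general idea, not the Bureau's procedure: subtract the average of nearby stations from the candidate station so that weather common to the region cancels out, then look for the point where the remaining difference shifts most sharply. A shift that appears at one station but not its neighbours suggests a non-climate change such as a new instrument or site. The function name `detect_step`, the `min_segment` parameter and the data are all assumptions.

```python
# Hypothetical sketch of a neighbour comparison: find the largest step in the
# difference between a candidate station and the mean of its neighbours.
# This is a simplified illustration, not the Bureau's actual homogenisation method.

def detect_step(candidate, neighbours, min_segment=5):
    """Return (index, step) of the biggest shift in candidate minus neighbour mean.

    index is the first year of the later segment; step is the later segment's mean
    difference minus the earlier one's. Returns (None, 0.0) if the series is too short.
    """
    if not neighbours:
        raise ValueError("need at least one neighbour series")
    n = len(candidate)
    if any(len(series) != n for series in neighbours):
        raise ValueError("all series must cover the same years")
    # Regional reference: mean of the neighbours for each year.
    reference = [sum(values) / len(values) for values in zip(*neighbours)]
    # Shared regional weather cancels out, leaving station-specific changes.
    diff = [c - r for c, r in zip(candidate, reference)]
    best_index, best_step = None, 0.0
    for k in range(min_segment, n - min_segment + 1):
        before = sum(diff[:k]) / k
        after = sum(diff[k:]) / (n - k)
        if abs(after - before) > abs(best_step):
            best_index, best_step = k, after - before
    return best_index, best_step


# Synthetic example: 40 years, two neighbours warming slowly, and a candidate that
# tracks them until year 25, when it jumps by 0.8 C (e.g. an instrument change).
neighbour_a = [20.0 + 0.01 * i for i in range(40)]
neighbour_b = [21.0 + 0.01 * i for i in range(40)]
candidate = [20.5 + 0.01 * i + (0.8 if i >= 25 else 0.0) for i in range(40)]

index, step = detect_step(candidate, [neighbour_a, neighbour_b])
print(index, round(step, 2))  # 25 0.8
```

The shared warming in all three synthetic series cancels in the difference, so only the candidate's own 0.8 C jump is detected.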

My experience so far? While the data appears to be available, it’s in a form designed to confuse rather than enlighten. I’ll deal with some details in my next post.

An organisation like the Australian Bureau of Meteorology performs vital services for Australians. Its value is, of course, highly dependent on its trustworthiness.

For example, if it were to issue flood warnings every time it predicted rain, people would soon ignore flood warnings, with potentially lethal consequences.

We understand predicting the weather is an inexact science and are tolerant, if somewhat scathing, when a prediction turns out to be wrong.

There appears to be some evidence that the Bureau is misusing its position of trust to exaggerate climate events like temperature rise and severe storms in support of a political, rather than scientific, agenda of dangerous global warming.

Even ten years ago, the idea of a government inquiry into an organisation like the Bureau of Meteorology would have been unthinkable. Even more unthinkable would be the idea of the Bureau ‘adjusting’ temperature records to support a political cause.

Unfortunately, the unthinkable has become cause for grave concern.