Well ‘O’ week is all in the past. It’s time to crack the books or whatever it is you do with a MOOC.

A MOOC is a Massive Open Online Course or some such. This one’s run by the famous John Cook AKA Mr. 97%.

Who better than a ‘cognitive psychologist’ to run a course about climate change?

Well, actually, it’s not about climate change at all. It’s about dealing with deniers.

The course is fairly well attended, with over 1000 students (as at day 2: 53% in North America, 25% in Australia, 16% in Europe and the rest in Asia, South America and Africa).

The topics this week are:

Consensus, Psychology of denial and Spread of denial.

There are quizzes and a discussion forum.

The first lesson, Consensus, was all about, well, consensus.

The first thing we had to do was answer the questions “How would you define scientific consensus? What wouldn't be considered consensus? How does a scientific consensus form?”

I expect my answer “What does consensus have to do with science? Prior to 1905 the scientific consensus in physics would be that light travelled through a luminiferous aether.” would be pretty typical.

The first thing we were told is that there are lots of ‘fingerprints’ of climate change and that the only explanation that matches all of them is human-induced greenhouse gases. No question. It’s true and that’s all that needs to be said on the subject.

Then came a big, university-style word, ‘consilience’, which means all the climate scientists agree. Next we learned that we’re all too busy to think for ourselves, so we need to listen to the experts. And 97% of the experts all agree. Mr. Cook himself wrote one of the papers so that makes it particularly true.

A petition of 31,000 scientists in the US was debunked because they weren’t all climate scientists.

Oh and a sceptic is sort of the opposite of a denier and it’s OK to be a sceptic as long as you’re a climate scientist. Or a psychology student.

The next lecture was something about dentists recommending toothpaste, the peer review process, 97% again, and the dwindling number of scientists funded to study anything but the ‘CO2 is the devil’ brand of climate science.

Lectures are actually little five-minute long videos with lots of pretty graphics. If my university had worked that way I could have gotten my degree in about a day-and-a-half.

In The Psychology of Denial we learned that there’s a gear loose in the heads of conservatives that makes them deny climate stuff.

We even got to do a nifty psychological profile. I’m one of sixteen ‘Hierarchical, Individualistic’ people doing the course. Almost all of the remaining thousand or so are proper ‘Egalitarian Communitarians’.

It turns out the nasty deniers use FLICC to fool nice people. That’s not Australian fly spray, but a clever acronym meaning:

Fake experts, Logical fallacies, Impossible expectations, Cherry picking and Conspiracy theories.

Each of these is a very bad thing done all the time by deniers and never by the 97%.

All this was followed by interviews with climate experts like historian Naomi Oreskes and psychologist Stephan Lewandowsky. Stephan became famous by discovering climate sceptics also believe the moon landings were faked. (I believe NASA in this case, just for the record.)

The week finished with an exposé of how ExxonMobil and Koch funded all sorts of secret organisations trying to spread climate denial.

The short story is that between 2005 and 2008 the bad guys spent $US8.45 million per year. By my count, 42 of the organisations got an average of $201,000 per year while the other 28 got nothing at all. By comparison, Greenpeace and The Nature Conservancy get more than $US1 billion per year in donations.
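For anyone who wants to check my sums, here is a rough sketch of the arithmetic in Python. The figures are the ones quoted above; the variable and function names are my own invention for illustration, and the $US1 billion figure is treated as a floor because the real number is only given as "more than".

```python
# Figures as quoted in the post; all names here are illustrative assumptions.
TOTAL_PER_YEAR_USD = 8_450_000        # combined yearly spend, 2005 to 2008
FUNDED_ORGS = 42                      # organisations that received money
UNFUNDED_ORGS = 28                    # organisations that received nothing
GREEN_DONATIONS_USD = 1_000_000_000   # "more than $US1 billion", used as a floor


def average_per_org(total_usd: float, org_count: int) -> float:
    """Return the mean yearly funding per organisation."""
    return total_usd / org_count


# Average across only the organisations that actually got funding.
avg_funded = average_per_org(TOTAL_PER_YEAR_USD, FUNDED_ORGS)

# Average across every organisation on the list, funded or not.
avg_all = average_per_org(TOTAL_PER_YEAR_USD, FUNDED_ORGS + UNFUNDED_ORGS)

# How many times larger the quoted donations floor is than the yearly spend.
ratio = GREEN_DONATIONS_USD / TOTAL_PER_YEAR_USD

print(f"Average per funded organisation: ${avg_funded:,.0f}")
print(f"Average across all listed organisations: ${avg_all:,.0f}")
print(f"Donations floor versus yearly spend: about {ratio:.0f} to 1")
```

The first figure is where my $201,000 comes from. Spreading the same money across all 70 organisations on the list gives a smaller average still.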

The little cartoon at the end of the lesson was mislabelled, I think.

The week finished with a quiz. I had three goes before I could get all the answers wrong. I’m trying for ‘low score’ in this course.

My final action was to participate in the discussion forum. One message caught my eye in particular.

This MOOC reviewed by skeptics

“At Judith Curry's blog (http://judithcurry.com/2015/04/28/making-nonsense-of-climate-denial/)

Curry is a fairly prominent atmospheric scientist (textbook author, for example) who is a critic of AGW activism, though certainly not a denier of climate science. But you will encounter some real fire-breathing deniers adding comments in the blog's forum section.”

I couldn’t help but reply:

“First, I strenuously object to the word 'denier'. I'm not a Holocaust denier, I believe the moon landings happened as presented by NASA and I don't do what the voices tell me to do. I seldom breathe fire.

I'm not aware of anyone who actually denies that there is more CO2 in the air than there was in 1957 when measuring at Mauna Loa began.

I also don't know anyone who denies that a molecule of CO2 will absorb photons in the infrared range and re-emit them.

I haven't found one name of an actual 'denier' in any of the lectures or discussions.

I became interested in the global warming scare after studying chaos theory. Meteorologist Edward Lorenz is generally credited with the initial development of this discipline and he was driven by curiosity about why synoptic forecasting was reasonably accurate while physical models had much poorer predictive skill.

His discovery that the climate was chaotic, that is highly sensitive to initial conditions, led him to the conclusion that predicting weather more than two weeks in advance was impossible.

I was confused, therefore, when I read that predictions were being made about conditions hundreds or even thousands of years in the future. Yes, I understand the difference between weather and climate, but strongly believe Carl Sagan's (actually Pierre-Simon Laplace's) dictum that "Extraordinary claims require extraordinary evidence."

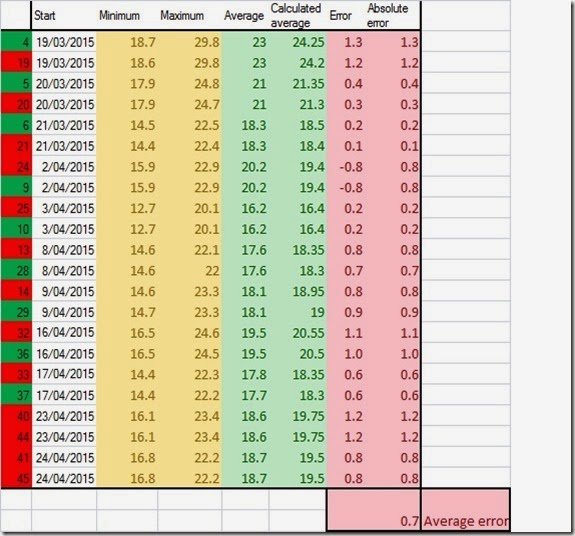

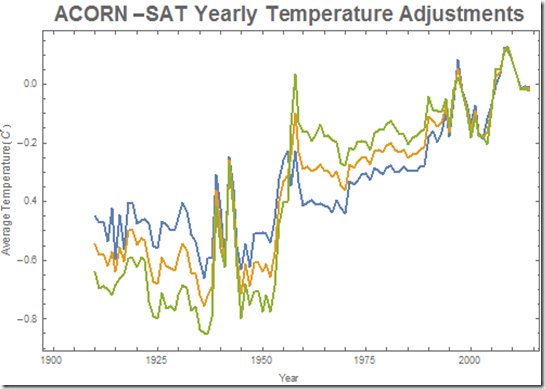

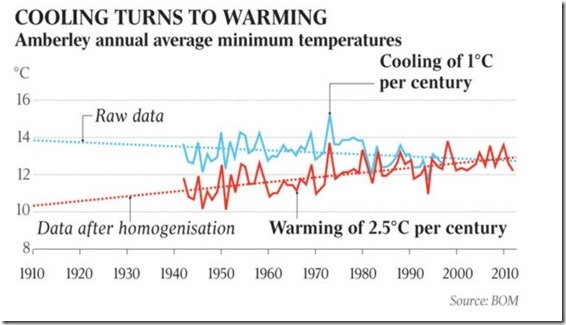

That scepticism remains with me today: In the IPCC's AR5 (Chapter 2, page 193, Table 2.7) there's a table showing the rate of warming of the Earth between 1880 and 2012. The value attributed to the Hadley Climate Research Unit of the University of East Anglia is 0.062 plus or minus 0.012 degrees Celsius per decade.

We're asked (told) to accept that the rate of heating of the whole Earth, land and oceans, can be measured to within twelve ten-thousandths of a degree Celsius per year over a period of 132 years, when the measuring is done with thermometers with an accuracy of about 0.2 degrees Celsius.

I'm sorry, I won't just accept the word of the 'experts'.

BTW, news of this course also appeared on WUWT and, in Australia, on Jo Nova and Andrew Bolt.”

More next week. I’m not sure I can stand six more weeks of this.

The screen is located 1.2 – 2.0 metres above the ground. The screen keeps sunlight from falling directly on the thermometer. Direct sunlight is just one of the multitude of things that can affect and distort temperature readings.