Getting ACORN-SAT data from the BOM

In my last post I went through, in some technical detail, how I extracted the Climate Data Online (CDO) data from the Australian Bureau of Meteorology (BOM). Click here to access the BOM CDO data pages.

Today I’ll go through the same process with the Australian Climate Observation Reference Network – Surface Air Temperature dataset.

If you remember, I set out to look at the differences between the raw data (CDO) and the adjusted data (ACORN-SAT) that have generated considerable controversy and a government inquiry into the behaviour of the BOM.

Click here to access BOM's ACORN-SAT page.

A quick look at the ACORN-SAT page made my antennae twitch:

“ACORN-SAT is a complete re-analysis of the Australian homogenised temperature database.”

I’m hoping that’s not BOM-speak for “the previous temperature database didn’t support our view on global warming so we ‘re-analysed’ it”.

I also noticed that no station data prior to 1910 are available. Remember last time, I mentioned the hot 1890s? But I’m getting ahead of myself.

The page has a link for a “Sortable list of ACORN-SAT stations with linked data”. Through a process called ‘screen scraping’ I was able to just select all of the entries in the table, drop them into Excel, save the worksheet and import it into SQL Server. For the next step I also saved the spreadsheet as a CSV file to make it easier to access in my conversion program.

So far, so good.

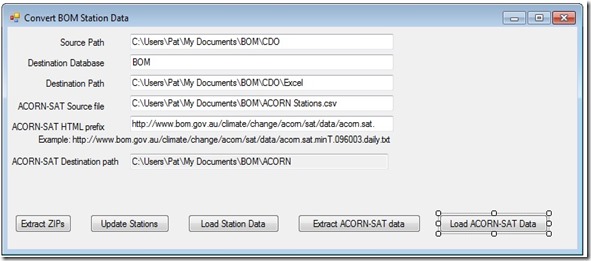

The next step was to add two more functions to my conversion program. The second button from the right reads the CSV version of the spreadsheet, looping through the stations. As the screenshot above shows, the minimum and maximum temperature data are hyperlinks. They’re actually hyperlinks to the text files containing the data.

My program uses the link to download the data and store it in a text file on my laptop.
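For anyone wanting to try something similar, here is a minimal Python sketch of that download loop. It is not my conversion program, just an illustration. The column names (`station`, `min_url`, `max_url`) and the output file naming are assumptions made for the example; the real CSV will have whatever headings came across from the spreadsheet.

```python
import csv
import io
from pathlib import Path
from urllib.request import urlopen


def fetch_url(url: str) -> bytes:
    """Download one file with plain urllib (no retries or throttling)."""
    with urlopen(url) as response:
        return response.read()


def download_station_files(csv_text, out_dir, fetch=fetch_url):
    """Save the min and max file for every station row; return the paths written."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        for element in ("min", "max"):
            url = row[f"{element}_url"]  # hypothetical column name
            target = out_dir / f"{row['station']}_{element}.txt"
            target.write_bytes(fetch(url))
            written.append(target)
    return written
```

Passing the `fetch` function in as a parameter means the loop can be tried out with a stand-in before pointing it at a live website.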

The rightmost button loops through the 244 files I’ve downloaded, does some error checking, then stores the data in the SQL Server database. The data is a record for each station for each day of each year giving the minimum or maximum temperature.
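A rough sketch of that load step, again in Python, with the built-in `sqlite3` module standing in for SQL Server. It assumes each data line looks like `YYYYMMDD value` separated by whitespace, which is an assumption about the file layout rather than a documented format. The table and column names are made up for the example, and values are stored exactly as they appear in the file.

```python
import sqlite3
from datetime import datetime


def parse_lines(lines):
    """Yield (iso_date, value) from lines assumed to look like 'YYYYMMDD value'."""
    for line in lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank lines and anything that is not a two-field row
        try:
            day = datetime.strptime(parts[0], "%Y%m%d").date()
            value = float(parts[1])
        except ValueError:
            continue  # basic error checking: skip rows that do not parse
        yield day.isoformat(), value


def load_file(conn, station, element, lines):
    """Insert one station file into a hypothetical daily_temp table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_temp ("
        "station TEXT, element TEXT, obs_date TEXT, temp_c REAL)"
    )
    rows = [(station, element, d, v) for d, v in parse_lines(lines)]
    conn.executemany("INSERT INTO daily_temp VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)
```

A call such as `load_file(conn, "10092", "max", open(path))` would load one downloaded file and return the number of rows stored.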

There’s one odd thing I noticed right away. When no data is available for a particular station for a particular day, instead of leaving it out, the BOM has used a ‘dummy’ value of 99999.9 degrees Celsius. Talk about global warming! Imagine using that value in a calculation.

Just for fun, I calculated the average for station 10092 (Merredin, Western Australia) using the dummy value. I know WA is hot, but an average temperature of 1,634 degrees Celsius seems a bit excessive.

I know the value shouldn’t actually be used, but leaving the record out or using a standard value like NULL is preferable and removes the chance of an error like this creeping into a calculation.

Using NULL instead of 99999.9 gave the more realistic (and correct) average temperature of 24.8 degrees.

For readers unfamiliar with database technology, using the NULL value for calculating the average does three important things:

- It clearly marks the record as having missing data.

- When computing the sum, this record is skipped.

- When dividing the sum by the number of records, the number of records is adjusted as well.
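The three points above can be seen in a tiny example. This sketch uses Python’s built-in `sqlite3` rather than SQL Server, and the three readings are invented for illustration: two real values and one missing day, stored once with the dummy value and once as NULL.

```python
import sqlite3


def compare_averages():
    """Return (average with dummy value, average with NULL) for made-up readings."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings (with_dummy REAL, with_null REAL)")
    conn.executemany(
        "INSERT INTO readings VALUES (?, ?)",
        [(20.0, 20.0), (30.0, 30.0), (99999.9, None)],  # third day has no data
    )
    # AVG skips NULLs in both the sum and the count.
    return conn.execute(
        "SELECT AVG(with_dummy), AVG(with_null) FROM readings"
    ).fetchone()


avg_dummy, avg_null = compare_averages()
print(round(avg_dummy, 1), avg_null)  # 33350.0 25.0
```

With the dummy value the sum is divided by three records; with NULL it is the sum of two readings divided by two.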

Using a dummy number like the BOM has done is 1960s-style data handling practice and should definitely be a no-no in 2015.

I’ve changed the 99999.9s to NULLs in my copy of the database.
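A change like that comes down to a single UPDATE statement. Here is a sketch of one way to do it, using Python’s `sqlite3` as a stand-in for SQL Server; the table name `daily_temp` and column name `temp_c` are hypothetical. Comparing against a threshold a little below 99999.9 avoids relying on an exact floating-point match.

```python
import sqlite3


def null_out_dummy(conn, threshold=99999.0):
    """Replace dummy readings with NULL; return how many rows were changed."""
    cur = conn.execute(
        "UPDATE daily_temp SET temp_c = NULL WHERE temp_c >= ?",
        (threshold,),
    )
    conn.commit()
    return cur.rowcount
```

No real air temperature comes anywhere near the threshold, so only the dummy rows are touched.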

I completed the initial database build and found 3,635,866 minimum temperature records for the expected 112 sites and 3,635,933 maximum temperature records for the same 112 sites.
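Counts like these come from a simple grouped query. The sketch below shows the general idea with Python’s `sqlite3`; it assumes a hypothetical `daily_temp` table with an `element` column holding `min` or `max`, which may not match how the real database is laid out.

```python
import sqlite3


def summarise(conn):
    """Return (element, record count, distinct site count) for each element."""
    return conn.execute(
        "SELECT element, COUNT(*) AS records, COUNT(DISTINCT station) AS sites "
        "FROM daily_temp GROUP BY element ORDER BY element"
    ).fetchall()
```

Adding `station` to the GROUP BY would break the counts down per site, which is one way to find where the minimum and maximum totals diverge.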

Some of the initial questions that occur to me, and that I intend to explore, include:

- Why is the number of maximum temperature records different from the number of minimum temperature records?

- Why were just these 112 sites out of a possible 1871 chosen?

- How different is each ACORN-SAT site from its CDO equivalent?

- Exactly what adjustments were made?

- How are the adjustments justified?

- How were the adjustments made?

- What are the uncertainties in these measurements?

- How different are the trends indicated by each dataset?

I welcome any additional suggestions from anyone who happens to follow this blog. I’m also happy to make available details of the source code and scripts used in the compilation of this database. I’m happy to make the database itself available more generally, subject to any copyright restrictions that may exist. I’ll look at that issue more closely.

In my next post I’ll report on my initial look at the data.
